Transition Matrix

We introduce a novel traffic control strategy that applies Markovian traffic assignment framework to dynamic traffic assignment. Markovian framework is a promising approach to improve the efficiency of traffic management, combining theory and learning for large scale system routing.

Transition matrices are used in Markovian traffic assignment framework to derive traffic flow allocation and only the flow split ratios at each intersection are required for traffic assignment. Consequently, the amount of variables required for routing behavior modeling is much smaller, which makes it efficient and convenient to extend the traffic control strategy to larger system.

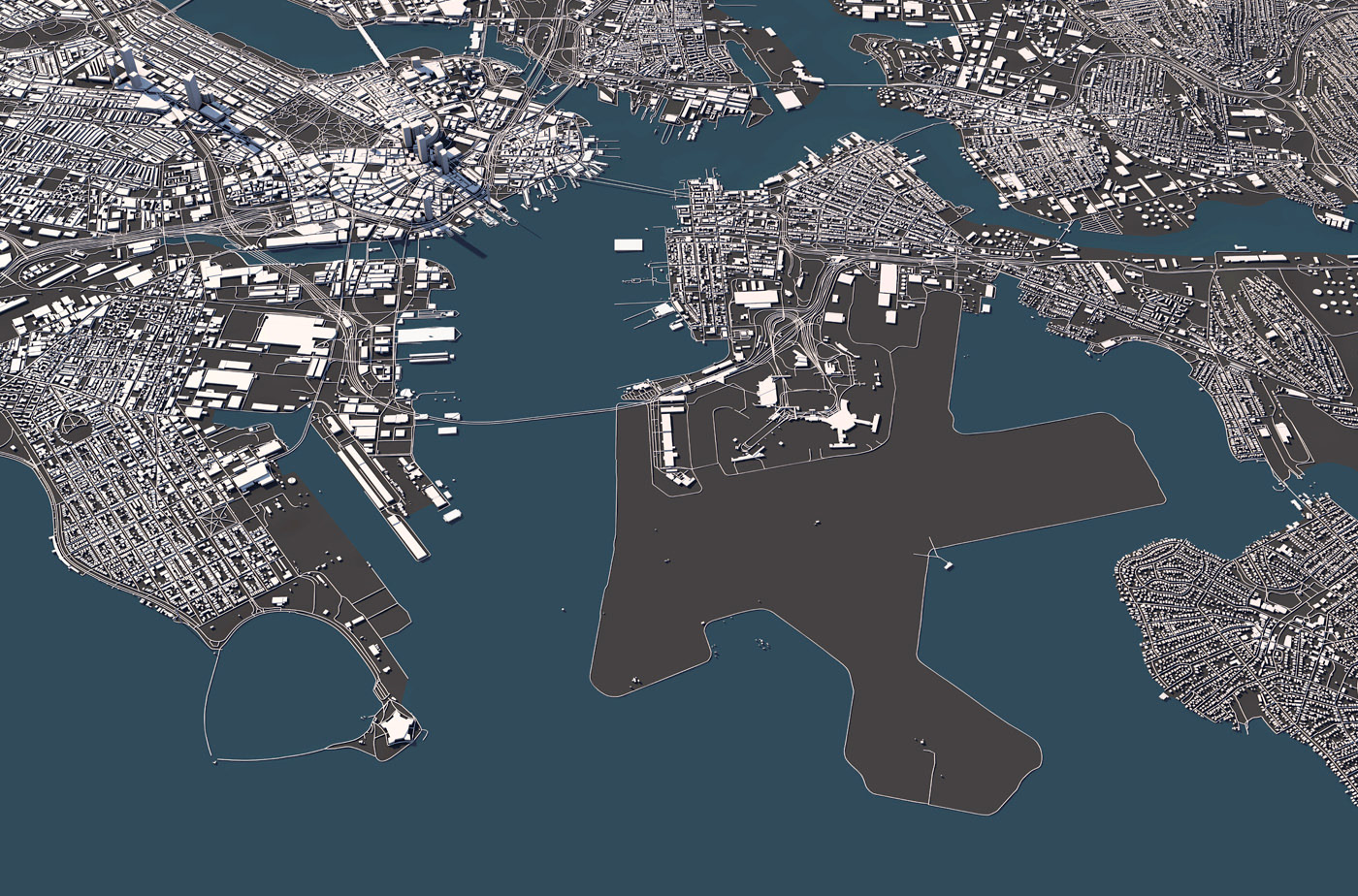

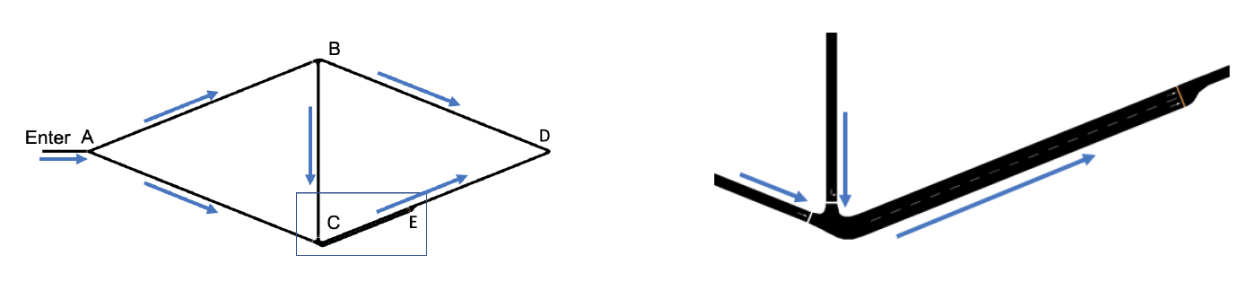

As a case study, we applied the framework to a standard benchmark diagram which is simulated in Simulation for Urban Mobility (SUMO). A screenshot of the SUMO network setup is shown below on the left. The blue arrows are added to visualize the direction of traffic flow. A zoom-in window detailing the merge dynamics at C is shown below on the left: the vehicles from the two lanes take turns to merge in a “first come, first served” basis.

The optimal routing behaviors are independently learned through grid search, random search, and evolution strategies, under three different reward functions (network outflow, total vehicle hours of travel, and average marginal regret).

Grid Search

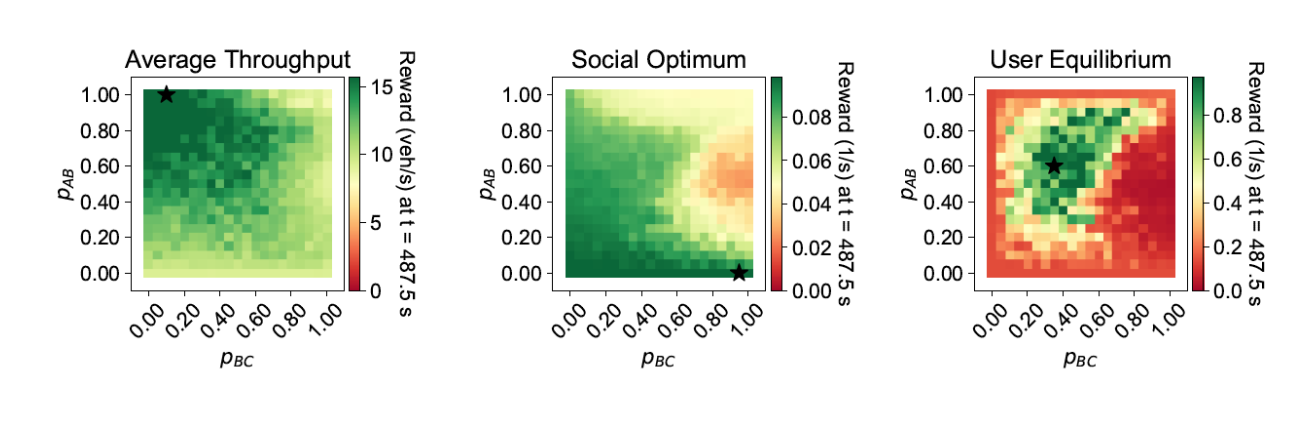

The

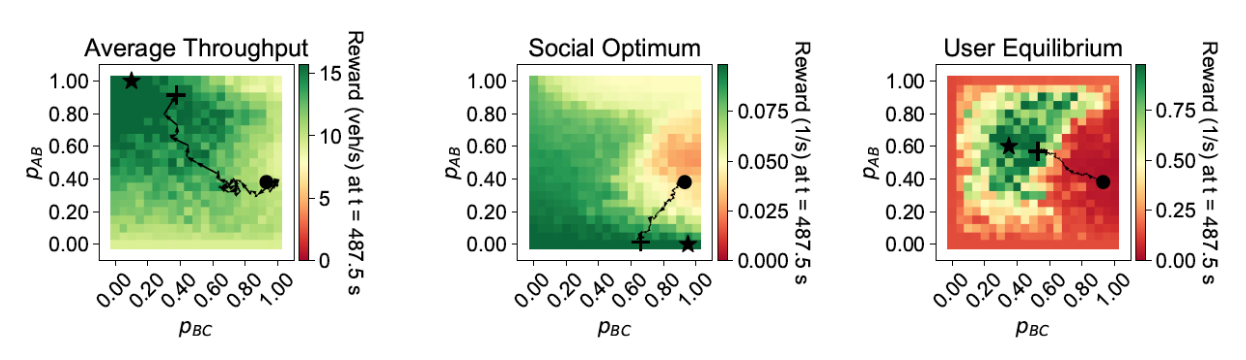

video

shows the time-varying distribution of each reward function on the meshgrid of PAB and PBC throughout the simulation. From left to right, three graphs respectively correspond to reward functions for average outflow, inverse of vehicle hours of travel and inverse of average marginal regret.

At time 487.5s, when the network has converged to steady state, the point that gives the highest reward is marked as “★” for each reward function.

Random Search

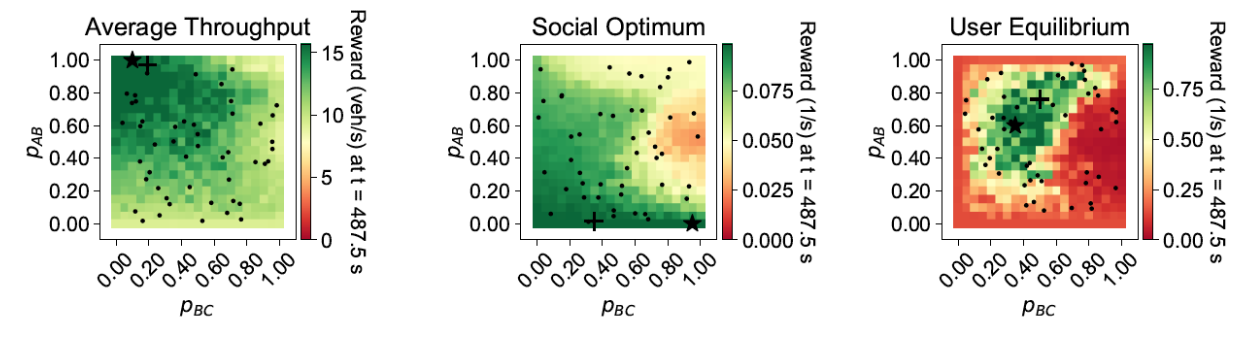

50 sample points (black dots) are picked to measure the reward function and the optimal point that gives the highest reward is marked by “+” symbol. For comparison, the solution found by grid search is marked by “★” symbol.

Evolution Strategies

Evolution strategies first randomly pick a starting point and then start climbing uphill with gradient ascent demonstrated by the black trace. The algorithm is manually terminated after 50 iterations at location marked by “+” symbol. For comparison, the solution found by grid search is marked by “★” symbol.

In practice, if the dimension of the problem is small, all of the three methods are good choices. Grid search method is exhaustive but it doesn’t scale well with the dimension of the problem. For large problems, random search or evolution strategies should be used instead. For problems where it is possible to efficiently compute the exact gradient, vanilla gradient ascent methods may be used instead. However, random search and evolution strategies can be easily parallelized across a large cluster of nodes, which may find a good solution with higher efficiency. In conclusion, by introducing the Markov property of traffic system (eg. a traveller’s decision about the next link to take is not affected by his/her previous path), the Markovian framework reduces the number of variables to compute in the static traffic assignment. This notion is further extended to dynamic traffic assignment using the micro-simulator SUMO. On a benchmark network, the simulator learns optimal routing behavior by finding optimal flow split ratios at every node using grid search, random search, and evolution strategies. The results show the ability of the Markovian framework to learn optimal routing behavior using SUMO.

This work can lead to several applications: 1. Use the transition matrix framework to perform traffic estimation through data assimilation using regression with cross-sectional data. 2. By learning optimal transition matrices, decentralized traffic control strategies may be developed. 3. With the application of Markov chain theory and graph theory, the resiliency of traffic network can be better understood. 4. it’s possible to consider the time-varying transition matrices in which the split ratio at each timestep may be learned through reinforcement learning. 5. For multi-OD network, it’s possible to learn a transition matrix for each destination.